ClioSport.net

The Power PC Thread [f*ck off consoles]

- Thread starter Munson

Clio 182

> Last couple years been meaning to properly look at a new pc, kinda put off to stuff being in low demand and price. My current pc that i got custom from Overclockers is now 6 years old, there's nothing that i've replaced on it that i can recall. What's the tell tale signs of a pc starting to go due to age, or does it not work like that, i presume it's 1 thing that goes at a time. Just wondering how far can i go before one day i want to turn on my pc and it wont boot up? Is it time to spend -£1000 on a new pc?

Stuff can just die, but you're probably going to end up upgrading long before that point imo. If the PC plays the games you like, at the resolution you want, at a frame rate that you find acceptable then don't bother upgrading.

charltjr

ClioSport Club Member

It will either just stop working one day or you’ll start to get frequent crashing/reboots/display corruption.

Honestly I wouldn’t worry about it, if it’s still doing everything you need then crack on.

You might want to consider refreshing the thermal paste at some point if cpu or GPU temps start getting too high, also check the SMART data on any hard disks or SSDs to make sure they’re not racking up lots of failures.

IMO everyone should make sure they have a backup of anything they don’t want to lose, but that applies as much to a new pc as an older one.
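For anyone who would rather script the SMART check than click through a GUI, here is a minimal Python sketch. It assumes smartmontools is installed and that `smartctl --json -H -A <device>` returns the JSON layout used below (`smart_status.passed` and `ata_smart_attributes.table`). The helper names, the device path and the choice of attributes to watch are illustrative assumptions, and NVMe drives report a different structure.

```python
import json
import subprocess

# Attribute IDs often treated as early-warning signs on SATA drives.
# This selection is an assumption for illustration, not an official list.
WATCHED = {5, 197, 198}  # reallocated, pending and offline-uncorrectable sectors


def smart_warnings(report: dict) -> list[str]:
    """Return human-readable warnings from a parsed smartctl JSON report."""
    warnings = []
    if report.get("smart_status", {}).get("passed") is False:
        warnings.append("overall health check FAILED")
    for attr in report.get("ata_smart_attributes", {}).get("table", []):
        raw = attr.get("raw", {}).get("value", 0)
        if attr.get("id") in WATCHED and raw > 0:
            warnings.append(f"{attr.get('name', attr['id'])}: raw value {raw}")
    return warnings


def read_smart(device: str) -> dict:
    """Run smartctl (usually needs admin rights) and parse its JSON output."""
    result = subprocess.run(
        ["smartctl", "--json", "-H", "-A", device],
        capture_output=True, text=True, check=False,
    )
    return json.loads(result.stdout)


# Example use ("/dev/sda" is a placeholder; `smartctl --scan` lists real devices):
#     for line in smart_warnings(read_smart("/dev/sda")):
#         print(line)
```

A non-zero raw count on any of those attributes does not mean the drive is about to die, but it is a good prompt to check that the backup is current.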

Geddes

ClioSport Club Member

Fiesta Mk8 ST-3

I’ve mentioned a couple of times that I’ve been getting the blue screen of death; I’ve had them probably about 4 or 5 times now over the past few months. I wouldn’t know where to begin putting on thermal paste myself, like. Yeah, I’ve been aware of backing up files and that on hard drives just in case.

I do have a SSD and a hard drive on my current pc but I don’t know how to transfer storage between each other

Jonnio

ClioSport Club Member

Punto HGT Abarth

Simplest thing would be to just save everything important to you in a single folder, then copy and paste it to another drive every now and then.

I've been using FreeFileSync and saved a couple of transfer setups that just do it automatically when I double click them.
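If you'd rather not install anything, the "one folder, copied to another drive" approach can also be scripted. This is a rough Python sketch using only the standard library; the function name and the example paths are made up for illustration. Note that it is a plain copy, so files deleted from the source are not removed from the backup.

```python
import shutil
from pathlib import Path


def back_up_folder(source: Path, backup_root: Path) -> Path:
    """Copy `source` into `backup_root`, overwriting files already there."""
    destination = backup_root / source.name
    # dirs_exist_ok (Python 3.8+) lets repeat runs reuse the same backup folder.
    shutil.copytree(source, destination, dirs_exist_ok=True)
    return destination


# Example use (hypothetical paths, adjust to your own drives):
#     back_up_folder(Path("C:/Users/me/Important"), Path("D:/Backups"))
```

Running it again later refreshes the copy on the second drive with whatever has changed in the source folder.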

jenic

ClioSport Club Member

I’ve been offered a pc from a friend who is selling up, I wondered what a fair price would be for below?

It’s an AWD-IT system from Costco:

ASUS ROG Strix B550-F Motherboard

16GB RAM

AMD Ryzen 5 5600X 6-core

NVIDIA GeForce RTX 3070 ASUS Dual 8GB

Samsung Evo 870 1TB NVME Boot Drive

1TB Sata SSD

2TB Sata Disk

AIO CPU cooler

Maccy

ClioSport Club Member

Straight 6

Depending on the memory speed, I'd guess around £700-800?

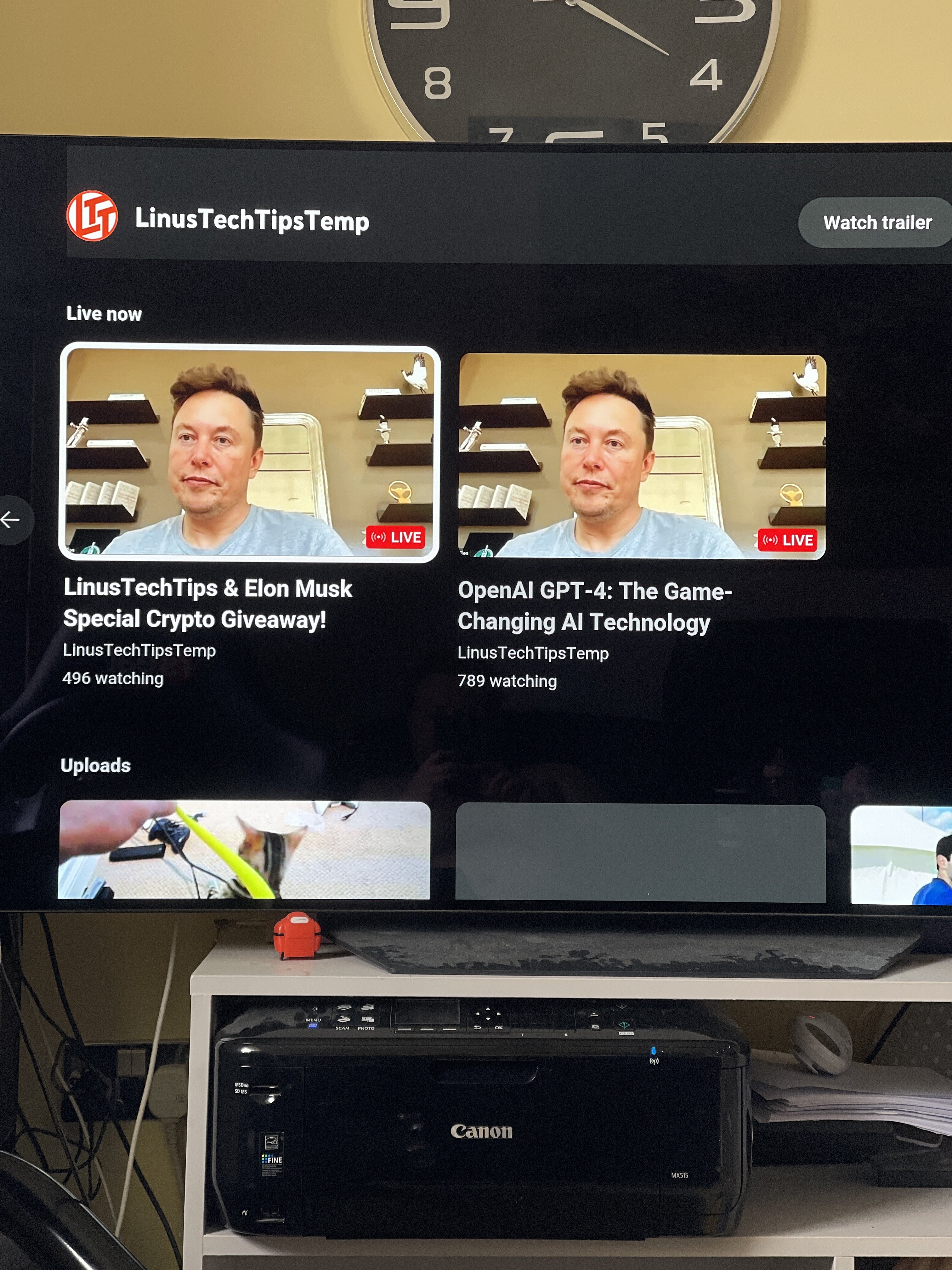

Lol rekt. Literally every video on LTT is being reuploaded, time to unsubscribe for a few days 😂

Smart that

N0ddie

ClioSport Club Member

Tesla Model 3

Where’s the PC bud?

Ray Gin

ClioSport Club Member

Cupra Leon & Impreza

Lols

It will be sat on that desk come the weekend. Hopefully...

1 year after owning my PC near enough finally getting myself a gaming keyboard. Not sure I'll actually really use it for gaming so much will definitely give it a crack just to try something different. Going to get the wireless hyperx RGB mouse to go along with it too 😅

uk.hyperx.com

HyperX Alloy Origins Core PBT – Mechanical Gaming Keyboard

The HyperX Alloy Origins is a mechanical gaming keyboard with a compact design and customizable RGB key colors. The addition of HyperX PBT keycaps makes it even more durable.

R3k1355

ClioSport Club Member

Remember that 6900XT you linked for me @ £700 back in January?

Click the link now. 😲 😲 😲 😲

Darren S

ClioSport Club Member

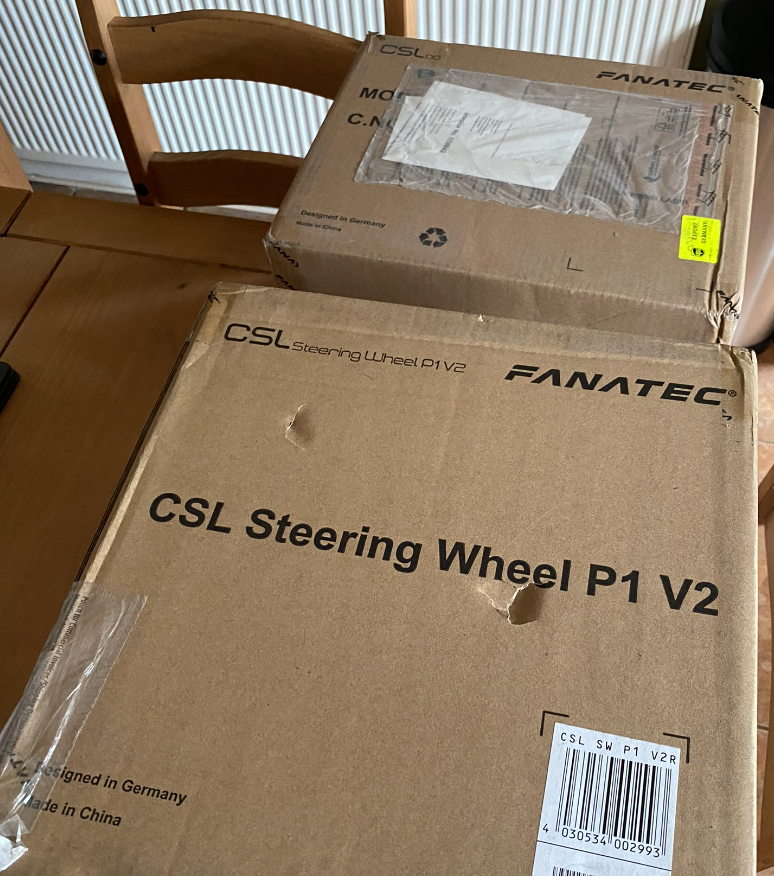

Three boxes of Fanatec loveliness landed today.... looking forward to setting it up!

Darren S

ClioSport Club Member

Finally got this set up on Monday - still no cable management done yet, so that will be a weekend job. Not a particularly great thing to get into the seat and have to slide my feet around dangling cables.

Is it worth it? I'm glad to say - yes. It's clearly a league up from my previous Logitech kit and actually 'feels' more weighty. The Fanatec software is a great one-stop-shop for all of the peripherals - wheelbase, wheel, pedals and shifter - though strangely the pedal set cannot check for updates without directly being connected to the PC via USB.

The wheelbase acts as a hub to which all other accessories connect. It can even have a handbrake peripheral connected to it - just in case I preferred rally or drifting games. This makes for far less demand upon the USB ports on the PC, which now needs only a single one; previously I needed one port each for the Logitech wheel, Fanatec pedals and Fanatec shifter.

One thing that I have to completely slate Fanatec for however, is the lack of pre-packed sundries within the packaging. They give you no M6 or M8 bolts at all - and for the price of the damned thing, I'm surprised at just how inexpensive chucking a small pack of mixed nuts and bolts into the box, would have been. Definitely a thumbs down for that.

My entry-level unit kicks out up to 5Nm of torque through the wheel. This I find is more than ample to fight with when the front end of the car disagrees with your intentions. Quite how the top flight units with 30Nm+ compare is beyond me. You want a driving session, not a full-blown workout - or worse, broken wrists.

I'm finding whilst working from home, that I actually want to have a quick go of it now during my dinner-hour. Hopefully that novelty feeling won't wear off too quickly and that I'll still be having a lot of fun with it. I was on there just an hour ago in ACC with an Alpine A110 in the GT4 class at Donington for a quick 15-minute race. I span out on the first lap when I overcooked it coming down the Craner Curves into the Old Hairpin and came a woeful last - including a slapped wrist for ignoring blue flag warnings for the two GT3 class cars lapping me.

All good fun. Not quite sure I'm ready for the chaos of online racing just yet though.

charltjr

ClioSport Club Member

Slightly random question, I have a one of those little NUC-a-like tiny form factor PCs with a mighty two core four thread i3 in it and I was thinking it would make a nice little retro emulation system.

It's been a while since I properly looked into anything like this and things have obviously moved on a hell of a long way since early builds of MAME and individual emulators. Anyone run something similar? RetroArch looks like a good choice?

Used to be that getting hold of huge dumps of old games was pretty easy but looks like there's a ton of blatant malware carp to wade through now, anyone got a decent source for the games/roms?

charltjr

ClioSport Club Member

Should have said, I'm planning to have it hooked to a TV and use a Stadia controller for input so something that retains button mappings etc would be excellent. There will be a wireless keyboard attached too, but ideally as controller-centric as possible.

N0ddie

ClioSport Club Member

Tesla Model 3

Get into it bud. Grab yourself a cheap "new membership" for iRacing and you'll be hooked. ACC's online is soulless in comparison.

Darren S

ClioSport Club Member

A good explanation of VRAM usage in current games for us mere knuckle draggers. Probably the first day of school in comparison, for the likes of Andy @SharkyUK

https://www.techspot.com/article/2670-vram-use-games/?fbclid=IwAR2OXmN4bXmBNFOCBJLy_v_dH1TJNWdqJZe5_iNEHaf-DJFpgp_OUynica4

Grandpa Joe

ClioSport Club Member

At 4K with everything maxed I regularly see 14-15GB of VRAM usage. That's why cards targeted at 4K gaming come with sufficient VRAM.

Cards for 1440P have less, cards for 1080P have less again. Nothing new.

Darren S

ClioSport Club Member

I think what's tended to shift in recent times though, is it's not always resolution that kicks VRAM in the nads.

For the vast majority of times, the rule of 'more res = more VRAM required' is definitely true. But with more fidelity and fancy effects being applied, those can hog memory just as bad - especially if poorly optimised.

I'd like to know what happens with the other end of hardware though - and how well games are aware of available VRAM and whether or not they utilise it? So, the likes of The Last of Us utilising circa 12GB of VRAM - if played on a 4090Ti, does it 'see' that it has double that to play with and take advantage of that fact?

Grandpa Joe

ClioSport Club Member

Sometimes you can adjust the maximum available to the game in the game settings itself, other times you need to edit a config file for the game (you can do this for the number of CPU cores used too).

If you run out of VRAM I'm not sure if shared memory is still a thing and whether it can be used with dedicated graphics cards or not. I think it can but not sure on the ins and outs of implementing it correctly.

Clio 182

In short, yes. The VRAM usage shown by monitoring software like GPU-Z or Afterburner simply shows the amount of VRAM the game is requesting. There is absolutely a minimum amount that a game will need in order to run, especially for increased resolutions and textures etc, but as more becomes available the amount requested also increases. When people are seeing all the VRAM on their 4090 being "used" that doesn't mean that the game necessarily needs or is using that amount, it's just how much it has requested.
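To see that allocation figure outside of GPU-Z or Afterburner on an NVIDIA card, a small Python sketch like the one below can poll `nvidia-smi`. It assumes `nvidia-smi` is on the PATH and that the `memory.used` and `memory.total` query fields print plain MiB numbers with the `csv,noheader,nounits` format; the function names are made up for illustration. As with the other tools, `memory.used` is what has been allocated, not what the game strictly needs.

```python
import subprocess

QUERY = [
    "nvidia-smi",
    "--query-gpu=memory.used,memory.total",
    "--format=csv,noheader,nounits",
]


def parse_memory_line(line: str) -> tuple[int, int]:
    """Turn a line such as '14890, 24564' into (used_mib, total_mib)."""
    used, total = (int(field.strip()) for field in line.split(","))
    return used, total


def gpu_memory() -> list[tuple[int, int]]:
    """Return (used_mib, total_mib) for each GPU that nvidia-smi reports."""
    output = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
    return [parse_memory_line(line) for line in output.splitlines() if line.strip()]


# Example use (needs an NVIDIA GPU and driver installed):
#     for used, total in gpu_memory():
#         print(f"{used} MiB allocated of {total} MiB")
```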

SharkyUK

ClioSport Club Member

> A good explanation of VRAM usage in current games for us mere knuckle draggers. Probably the first day of school in comparison, for the likes of Andy @SharkyUK

🤓 Mooar GDDRz. That's my knowledge exhausted. 😛

> I think what's tended to shift in recent times though, is it's not always resolution that kicks VRAM in the nads.
>
> For the vast majority of times, the rule of 'more res = more VRAM required' is definitely true. But with more fidelity and fancy effects being applied, those can hog memory just as bad - especially if poorly optimised.
>
> I'd like to know what happens with the other end of hardware though - and how well games are aware of available VRAM and whether or not they utilise it? So, the likes of The Last of Us utilising circa 12GB of VRAM - if played on a 4090Ti, does it 'see' that it has double that to play with and take advantage of that fact?

It's a bit of a pain in the ass when it comes to memory management, especially on PC where you effectively have to have two copies of data; one copy in host memory (CPU / system RAM) and a copy that gets uploaded to the device (GPU / VRAM). There's work going on to get around this limitation but the current architectures we use aren't well-suited to it and the dream of ultra-fast/shared memory pools across an entire system is still a bit of a dream. It would be lovely to have a great big block of general [fast] memory available that the CPU and GPU can address quickly and independently without the inherent stalls and slooooow bus transfers that we currently have to deal with.

Yeah, you're right - it's not just the resolution of the primary display(s) that push VRAM requirements up - it's the heavyweight G-buffers that game engines use. Especially as the demand for increased fidelity, detail and realism increases. When the component parts of the G-buffers have to start increasing their resolutions to realise the sort of visuals that demanding consumers/gamers are after... well... you can probably imagine! As discerning gamers and techies, we know that jumping from a resolution of 1024x1024 to 2048x2048 isn't simply doubling the requirements for that specific component - it's 4x more. 4x more storage, 4x more data to move around a system, 4x more data to address, and potentially 4x more pixels (work) for the various shaders to work their way through. Of course, this is a very basic outline but memory usage can easily explode.
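To put rough numbers on that 4x jump, here is a back-of-the-envelope Python sketch. The 4 bytes per pixel and the four-target G-buffer layout are assumptions picked for illustration; real engines choose their own formats and often compress or pack these buffers.

```python
def buffer_bytes(width: int, height: int, bytes_per_pixel: int = 4) -> int:
    """Memory for one uncompressed render target, in bytes."""
    return width * height * bytes_per_pixel


def gbuffer_bytes(width: int, height: int, bytes_per_target: list[int]) -> int:
    """Total for a G-buffer made of several targets at the same resolution."""
    return sum(buffer_bytes(width, height, bpp) for bpp in bytes_per_target)


small = buffer_bytes(1024, 1024)   # 4 MiB at 4 bytes per pixel
large = buffer_bytes(2048, 2048)   # 16 MiB: double the width and height
print(large // small)              # 4

# Hypothetical G-buffer layout: albedo, normals, material parameters, depth.
layout = [4, 8, 4, 4]
for name, (w, h) in {"1080p": (1920, 1080), "4K": (3840, 2160)}.items():
    print(name, round(gbuffer_bytes(w, h, layout) / 2**20, 1), "MiB")
```

The same factor of four applies to every target in the set, which is why memory use climbs so quickly once several full-resolution buffers are in play.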

Memory management itself is a bit of a double-edged sword - older versions of, say, DirectX/3D did quite a lot to hide away the complexities of memory management from the developer. This used to be a blessing in some ways, yet ultimately we'd end up wishing we had finer control over how and when memory was allocated and used. With DX12 and Vulkan, it's really up to the developers to deal with, and it's both great and a f**king pain. Being able to have control over how your memory is managed is all well and good, but it's so easy to get it wrong. Sounds crazy, how difficult can it be to allocate, dish-out, claw-back, chunks of memory? You'd be surprised...! It doesn't take much to get it wrong and suddenly you're in a world of pain with stuttering, stalls, data not being ready when the renderer calls for it, and a million and one other issues. But it's part of the development process so it's up to the devs to get it right ultimately. Or, to get it right 17 patches later after release... 😜

Ray tracing (and path tracing) only serves to increase demand for VRAM. In addition to the game assets, the ray tracing hardware on the GPU needs some form of BVH in VRAM against which it can cast rays and run its hit/miss shaders, etc. The BVH is effectively an optimised form of the world data (level, characters, effects, and so on) against which rays are traced and hit-tested. As it suggests, that's yet another significant chunk of memory needed for another copy (subset) of the world data - but specifically for the RT side of things. Admittedly, that's a somewhat simplified overview but it would get boring and TLDR; otherwise. LOLgpu.

Here's a quick example I've just run up on one of my dev PCs... a relatively simple scene but with hidden complexity. It's fully path traced and uses high-quality PBR assets. As a result, it's using up 22GB of VRAM and 30+GB of system RAM in total. FLOLIPOP. That's how you do it, boys, girls and thems. 👌 The CPU is hardly being utilised.

Er, not sure what else to say now. I can make more pretty pics? 😂 The red, green and blue textures for the curtains alone are 125MBs...

Ok, so this example is a bit extreme and not really indicative of what a current game would use... BUT... with the evolution of Unreal Engine 5 (and similar) this is what we are moving towards. The gap between "game" assets and "movie" quality assets is closing but the requirements to facilitate that closing of the gap are ballooning. Thank goodness for AI, DLSS, detail inference, ML, and all the other stuff that's going on.

Geddes

ClioSport Club Member

Fiesta Mk8 ST-3

Just got another blue screen of death this morning, this time i took a picture

Stop Code: DRIVER_IRQL_NOT_LESS_OR_EQUAL

What Failed: nvlddmkm.sys

just done 1 update on my pc and cant remember what it was i think it was to do with windows 10.

Also it said my pc isn't compatible with Windows 11

That desk top from post 6,692 looks really nice, i got mine from ikea too with the same drawers but different colour. the edge of the desk top is starting to crack in some places on the edge, only had it for a couple years so far

Darren S

ClioSport Club Member

Out of interest - is your PC well ventilated, not next to a radiator, and is the graphics card itself free of dust and other debris?

SharkyUK

ClioSport Club Member

Darren S

ClioSport Club Member

> No the pc is completely standard, the computer and everything else is getting on over 6 years old now, i wouldn't know how to overclock either

That's plenty of time for build-up of dust, etc - even if the PC is used only sparingly. I used to take my PC into work typically twice a year and blast it out with an air compressor there.

These days I bought a specific IT duster and just use it at home.
